Stack Overflow (stackoverflow.com) is a widely used question-and-answer website for professional and enthusiast programmers. It is the flagship site of the Stack Exchange Network. The scale of the site is easiest to appreciate by looking at its user base statistics.

With a whopping user base of 15 million, Stack Overflow tops the chart. At the time of writing, the overall Stack Exchange network gets 1.3 billion page views per month, which amounts to about 55 TB of data per month. Designing the architecture for a network of sites at this scale is a huge task in itself.
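To put the monthly totals in perspective, they can be converted into average per-second figures. The sketch below uses only the two numbers quoted above; the 30-day month and the decimal definition of a terabyte (10^12 bytes) are assumptions made for the calculation, and real traffic is of course not spread evenly over the month.

```python
# Back-of-the-envelope arithmetic using only the two figures quoted in the text.
PAGE_VIEWS_PER_MONTH = 1.3e9         # 1.3 billion page views per month
BYTES_PER_MONTH = 55e12              # 55 TB, assuming 1 TB = 10**12 bytes
SECONDS_PER_MONTH = 30 * 24 * 3600   # assumes a 30-day month


def average_load(page_views, total_bytes, seconds):
    """Return (page views per second, bytes per page view, bytes per second)."""
    views_per_second = page_views / seconds
    bytes_per_view = total_bytes / page_views
    bytes_per_second = total_bytes / seconds
    return views_per_second, bytes_per_view, bytes_per_second


views_s, bytes_view, bytes_s = average_load(
    PAGE_VIEWS_PER_MONTH, BYTES_PER_MONTH, SECONDS_PER_MONTH
)
print(f"{views_s:.0f} page views per second on average")
print(f"{bytes_view / 1e3:.1f} kB transferred per page view")
print(f"{bytes_s / 1e6:.1f} MB per second on average")
```

Under these assumptions the averages come out to roughly 500 page views per second, about 42 kB per page view, and about 21 MB per second across the whole network.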

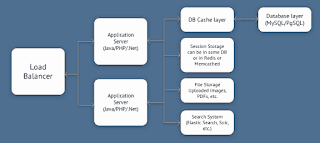

What may surprise you is that Stack Exchange employs a two-tier architecture, with no sharding and no Big Data tooling. It is fascinating that they run one of the most heavily trafficked sites (which also uses long-polling “real-time” messaging) on just 25 servers, most of them at about 10% load all the time.

Caching

Caching happens at several levels:

- At the browser/client level, images, JavaScript files, etc. are cached until their TTL (time to live) expires.

- At the load balancer level, the load balancer serves cached responses instead of making another request to the web server behind it. This improves response times for those requests and puts less load on the web server.

- Via Redis. The Redis server keeps data in memory so that frequent database hits are avoided. The cache is kept highly consistent and holds the data in memory, which reduces the load on the database. In-memory access is roughly 10x faster than a database hit.

- At the database level, SQL Server also provides a level of internal caching to avoid frequent disk reads.

- Using Elasticsearch, an index server that powers fast searching. About 90% of the queries are read-only, and this is the read-only server of Stack Overflow that helps render page views quickly.
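The idea behind the Redis layer can be illustrated with a cache-aside lookup: check the in-memory cache first and only go to the database on a miss. The following is a minimal sketch, not Stack Overflow's actual code. `TTLCache` is a hypothetical stand-in for Redis, `QuestionRepository` is a hypothetical name, and a plain dictionary plays the role of the SQL Server database.

```python
import time


class TTLCache:
    """Tiny in-memory cache with per-entry expiry (a stand-in for Redis)."""

    def __init__(self, ttl_seconds, clock=time.monotonic):
        self.ttl = ttl_seconds
        self.clock = clock
        self._store = {}  # key -> (expires_at, value)

    def get(self, key):
        entry = self._store.get(key)
        if entry is None:
            return None
        expires_at, value = entry
        if self.clock() >= expires_at:
            # The entry has outlived its TTL, so drop it and report a miss.
            del self._store[key]
            return None
        return value

    def set(self, key, value):
        self._store[key] = (self.clock() + self.ttl, value)


class QuestionRepository:
    """Cache-aside access: read the cache first, fall back to the database."""

    def __init__(self, cache, database):
        self.cache = cache
        self.database = database  # hypothetical: a dict standing in for the database
        self.db_hits = 0

    def get_question(self, question_id):
        cached = self.cache.get(question_id)
        if cached is not None:
            return cached
        # Cache miss: hit the database once, then remember the result.
        self.db_hits += 1
        row = self.database[question_id]
        self.cache.set(question_id, row)
        return row


fake_db = {1: "How do I exit Vim?"}
repo = QuestionRepository(TTLCache(ttl_seconds=60), fake_db)
first = repo.get_question(1)   # miss: served from the database
second = repo.get_question(1)  # hit: served from memory
print(first, "| database hits:", repo.db_hits)
```

The second call never touches the database, which is the effect the caching layers above are after. The TTL plays the same role as the browser-level TTL: it bounds how long a stale entry can be served.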

Scale Up

Scale out, the newer counterpart, is also the foundation of Big Data and has proven to perform well for very large systems, which is why everybody is rushing towards it. Scaling out is horizontal scaling, i.e. distributing heavy processing jobs across multiple small nodes/machines.

Scale up (vertical scaling) means increasing a machine's processing power (memory, CPU) rather than adding more machines.
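The difference between the two approaches can be made concrete with a toy model. This is only an illustrative sketch: the `Server` type, the helper functions, and the hardware numbers are all hypothetical and do not describe Stack Overflow's machines.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class Server:
    cpu_cores: int
    memory_gb: int


def scale_up(server, core_factor=2, memory_factor=2):
    """Vertical scaling: keep one machine, make it more powerful."""
    return replace(
        server,
        cpu_cores=server.cpu_cores * core_factor,
        memory_gb=server.memory_gb * memory_factor,
    )


def scale_out(fleet, extra_nodes):
    """Horizontal scaling: keep the machine size, add more machines."""
    return fleet + [fleet[0]] * extra_nodes


def total_cores(fleet):
    return sum(server.cpu_cores for server in fleet)


base = [Server(cpu_cores=8, memory_gb=64)]  # hypothetical starting point
scaled_up = [scale_up(base[0])]
scaled_out = scale_out(base, extra_nodes=1)

print(len(scaled_up), "machine,", total_cores(scaled_up), "cores")
print(len(scaled_out), "machines,", total_cores(scaled_out), "cores")
```

Both fleets end up with the same total core count, but the scaled-out one has two machines to coordinate, while the scaled-up one is still a single, larger machine.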

Why did Stack Overflow choose to scale up?

At Stack Overflow, they believe that 'performance is a feature'. To get the best performance, they maintain their own servers and scale them up when needed.

When a new requirement for more resources comes up, they decide based on these questions:

- How much redundancy do we need?

- Do we actually need to scale out?

- How much disk storage will be used?

  - SSDs or disk drives?

- How much memory?

  - Is the application memory-intensive?

  - Is there parallel usage?

- What CPU?

  - Core count based on parallelism

- Network: what is the transaction rate?


Shared Nothing Architecture

- https://stackoverflow.com/company

- https://www.dev-metal.com/architecture-stackoverflow/

- https://mobisoftinfotech.com/resources/mguide/shared-nothing-architecture-the-key-to-scale-your-app-to-millions-of-users/

- https://www.infoq.com/news/2020/04/Stack-Overflow-New-Architecture/

- https://nickcraver.com/blog/2016/03/29/stack-overflow-the-hardware-2016-edition/